Written by Patricia Lacroix.

AI is inescapable. It’s in the news, on our social feeds, and integrated into the software, devices and apps we already use. New tools crop up daily, promising to change the way we work. These new AI advancements are powerful, but they are just that: new. Not all the bugs have been worked out yet.

Some tools are amazing, others not so much. Some are so complex and overwhelming to learn that you might as well wait for the next iteration – which, at this pace, could be tomorrow. But what do these tools have in common? To be useful, they depend on our ability to be strategic and measured in their implementation.

Adopting this technology is a necessity in digital and creative advocacy where success depends on innovation. We’re always looking for new ways to engage, streamline and experiment for our clients. So, while our team sorts through the good, the bad and the automated – we’re taking stock of how AI impacts our work. Here are the five big questions our team is asking when it comes to automation and AI.

1. Can I frame this question differently? Am I asking the right questions?

The art of crafting prompts is integral to using AI tools well. For us as communicators, this is a new way to frame our thinking about projects. After all, tools are only as useful as the people who use them. We must consider whether we’re asking the question in a way that will get the best, most useful answer. How many ways can we ask it? How different are the results? Prompting AI to generate text is as much a thought exercise as it is a shortcut. We can’t use AI like a Google search engine unless we want to get results as random as Google search results (but without the sponsored content… for now).

This means thinking more creatively about content, facts and how we approach our subjects. Asking AI to list ways that airplanes contribute to climate change is going to result in a pretty general answer, with facts pulled from all over the world and the internet. Asking AI to create convincing arguments about how company X is innovating to reduce the carbon emissions of commercial jets flying in Canada, in the format of social media posts, will get you more specific, relevant information.

2. Is this even right?

While AI can help you go from blank pages to paragraphs of text in seconds, fact-checking can be more challenging than starting from scratch.

If you need to create five tweets based on one sentence, an AI text generator might be the perfect shortcut. This seems low risk: most people won’t be able to tell your content is written by AI… unless you screenshot it with the ChatGPT logo and post it to your X/Twitter. Conservative MP Ryan Williams posted one such screenshot about Canada’s capital gains tax, with incorrect information that landed him in hot water.

The problem is that AI-generated content isn’t always right. It’s often wrong. So, while we may use AI to write a first draft, it’s important to have a person review EVERYTHING before publishing to protect your brand and your credibility. A person will make sure the right information is emphasized and check that it’s factual. But then the question is – was this really a shortcut?

3. Does anyone care about this content?

Sometimes the shiny new tech gets the better of us, and it’s tempting to use because it’s easy and it’s there. However, content for the sake of content is never a sound strategy. The rise of multi-platform and trending content leaves brands and organizations feeling like they must keep up. Generating random, inauthentic or off-brand content could tank your engagement instead of driving it up. Most social media platforms now label images and text to indicate they were created with AI – to many, that label may be an indication to keep on scrolling.

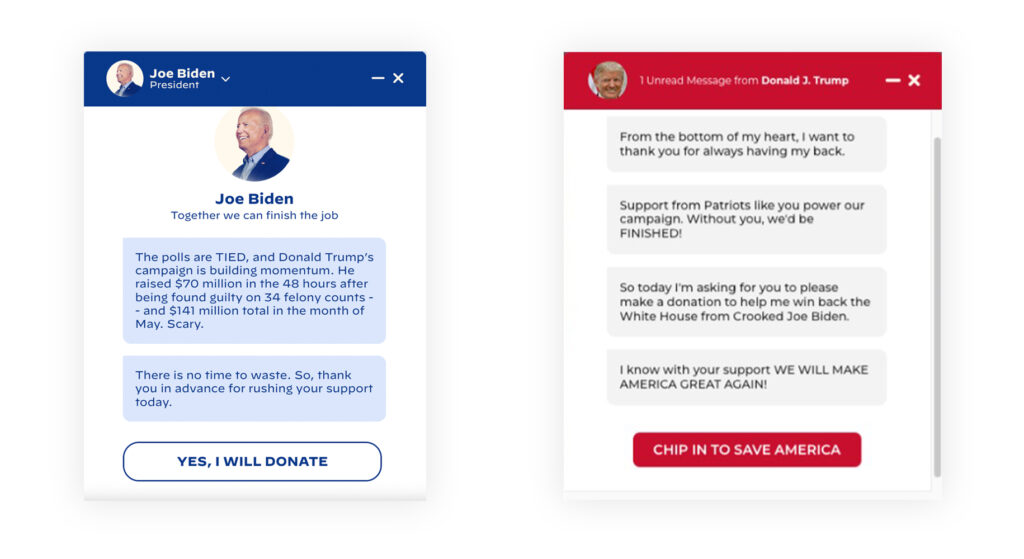

There’s a lot (A LOT) of content vying for your audiences’ attention. A chatbot may seem like a novel idea for a website, but does anyone really believe they are speaking to the real President Joe Biden, and if not, will it still have the intended impact? When it comes to audience trust and engagement, a headline with personality, a selfie video, or a newsletter written by a well-informed author might have the edge.

Ideas with human authenticity are often where the real magic is. Our team is always pushing to make engagement more meaningful. We want the specific people we connect with to feel invested enough to follow, support or return to the content. Lasting engagement and growth are a result of relevancy, not frequency.

4. Will people believe their eyes?

Online in 2024, the answer is often no. Generative image creation tools have advanced quickly. Design applications are full of features that magically add, remove and create with a click. As a result, people approach imagery online with skepticism these days. I find myself looking more carefully to spot the tells of an AI-generated image, especially when attached to news or political campaigns. Do the hands have five fingers? Are the landmarks in the correct setting?

The Guardian’s design team has committed to creating its election imagery by hand (like, with paper!) to combat the rise of fake news. This move towards slower, more labour-intensive design may seem counter-intuitive – but this gritty creative approach is very intentional, adding visual legitimacy to the journalism. A move towards the humanly imperfect and away from the synthetically polished may resonate more with some audiences when they have the same AI design tools at their fingertips that we do.

5. Is the juice worth the squeeze?

The upside – automating to reduce repetitive tasks means more time to think creatively and strategically. It saves time for us and produces quicker results for our clients. But with the explosion of AI technology, it can feel like an endless rabbit hole of options. It means spending hours (and dollars) testing them out and training team members to use them. Swapping tried and true tools for new efficiency-boosting apps may throw your team for a loop if the platform adds a new tool or updates its software without warning. It’s a calculation of whether the time and money are worth it, and in most cases, the only way to know for sure is to be curious and try.

Our team is prioritizing time to explore and test select areas of AI integration, without rushing to add every new hyped-up tool to our toolbox. We’re looking to enhance how we work, without undermining people’s strategic and creative expertise.

We do this by taking a flexible approach to AI and automation, with some ethical guardrails. For example, we disclose the use of these tools to our clients. We ensure no sensitive or proprietary information is input, and we review AI-generated content, checking everything for accuracy and authenticity. We’re experimenting with real use cases to see which tools are worth an investment, and which don’t live up to the hype. Most importantly, we’re having continuous conversations and asking questions as we go.